OpenAI's social network would be so boring

Plus: Vibe coders vulnerable to slopsquatting; an Online News Act for machine learning.

Hello. Sorry to be a killjoy, but those TikToks where Chinese manufacturers claim to be the true source of your favourite luxury goods are likely bogus.

Accounts claiming to be manufacturers of everything from luxury handbags to Lululemon yoga pants have been revealing how these products are actually made. But there are numerous signs that these particular companies aren’t legit. Besides the fact that these posts would likely tick off major clients, some of these accounts’ other videos describe them as middlemen not for luxury brands, but for sites like Temu, Shein, and AliExpress, which are not exactly known for quality.

The idea these accounts are putting forward is actually pretty sound: a lot of expensive products are made by third-party manufacturers and heavily marked up. But if you’re looking to these specific accounts to snag a deal, you’ll probably be disappointed with the results.

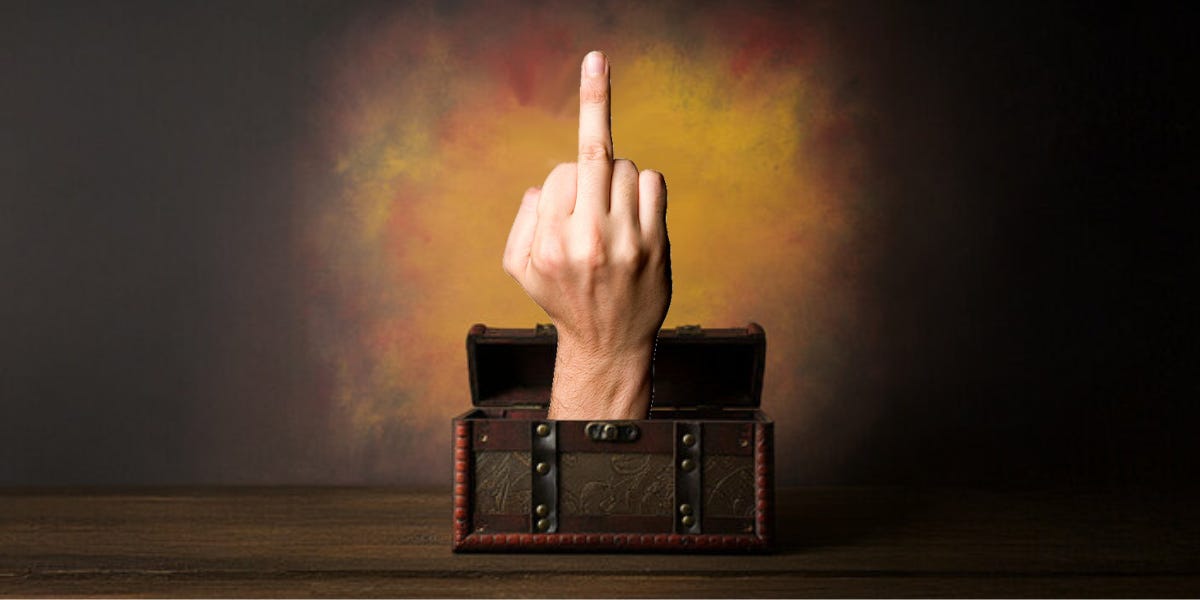

OpenAI’s plan to monetize memes: create a social network

It would probably be more like 4chan than X or Instagram.

According to The Verge, OpenAI is building its own social media network. The project is still in early stages, and may never be released, but CEO Sam Altman is privately soliciting feedback about a prototype for the network, which would be tied to the company’s AI image generator.

Why it’s happening: The Verge’s report suggests that a social network would give OpenAI a direct source of user posts and data to train future AI models on, as Meta and X have started doing. But OpenAI probably also sees the network as a potential (and desperately needed) source of revenue.

OpenAI says its image generator has brought in big user boosts, but that kind of viral engagement is hard to sustain and monetize. Putting more features behind a paid membership is risky, because “give us money so you can post a meme” isn’t the most effective sales pitch. A social network, on the other hand, could monetize that engagement with ads, as other platforms do, and keep those images on OpenAI’s own platform instead of having them shared elsewhere.

Who wants this?: The report likens the OpenAI social network to X or Instagram. But the more apt comparison is probably niche image boards like 4chan or 9gag: a feed of low-effort, barely entertaining memes being shared over and over, except instead of rage comics and soyface, it would be AI-generated action figures and “draw X in the style of Y” images that help further boil the planet.

Or, it could just get really weird: Facebook and Instagram Reels have already become dumping grounds for increasingly bizarre and nonsensical AI-generated images and videos, offering a potential preview of the kind of slop that could come from more direct AI integration.

IN OTHER NEWS

U.S. judge rules Google illegally monopolized ad tech. The decision said Google unlawfully tied its publisher-side platform to the exchange used to buy digital ads. Potential remedies could include forcing the company to break up its advertising business or imposing restrictions, such as prohibiting Google from prioritizing its own services. Google plans to appeal the ruling. (TechCrunch)

Nvidia’s CEO makes a surprise trip to China. A state media outlet said Jensen Huang went to Beijing at the invitation of a Chinese trade organization. While there, he also reportedly met with the founder of AI developer DeepSeek to discuss chip designs that would not trigger new restrictions on exporting GPUs. (Financial Times)

Astronomers find (potential) signs of life on a distant planet. The James Webb Space Telescope found dimethyl sulfide and dimethyl disulfide in the atmosphere of a planet orbiting a star 124 light-years away. As far as scientists know, on Earth those molecules are only produced by phytoplankton and other marine microbes. The discovery is not proof of life, however: the research team said more observations are needed to confirm the presence of the molecules, which could also be produced by non-biological processes that scientists are not yet aware of. (The New York Times)

Vector Institute hires new president and CEO. Glenda Crisp takes over leadership of Canada’s top AI research lab from Tony Gaffney, who moved into a new role as special advisor to the chair last month. Crisp’s experience includes stints as head of data analytics at Thomson Reuters and chief data officer at TD Bank. (BetaKit)

Should the Online News Act cover AI?

A report suggests the only way outlets will get fair value for training data is to force developers to negotiate.

McGill University’s Centre for Media, Technology and Democracy published a report analyzing the implementation and aftermath of the Online News Act. One forward-looking section suggests the Act should be expanded so that AI developers have to compensate news outlets for using their content to train and power AI.

The Act’s main provision, which forces tech companies to bargain with news outlets, is the fairest and most transparent way to determine what news content is worth, according to the report.

News outlets have also reported that AI is driving down their web traffic, whether because people are turning to chatbots to answer their questions or because AI search summaries keep users from clicking through to stories.

Background: The Online News Act is best thought of as a Plan B to deal with Google and Meta’s market control over digital advertising.

This is a bit of an oversimplification of how automated online advertising works, but Google and Meta don’t just make money from ads on their own platforms. They also profit from the ads you see on other websites, because they own most of the little boxes those ads appear in, as well as the behind-the-scenes technology that manages the ads and targets them to whoever is visiting the page. That level of control over all sides of a transaction also lets the “duopoly” demand a bigger share of the revenue each ad generates.

The problem is that Canadian competition authorities lacked the power to intervene on product pricing or to break up the companies. So the federal government turned to the “Australia model,” in which tech giants have to bargain with outlets for the right to have news content on their platforms. Rather than regulating the ad market directly, the government made more of a philosophical argument: if online platforms carried news and made money selling ads next to that content, then news outlets were entitled to some of that revenue.

Why it’s needed: When the dot-com bubble burst, it didn’t wipe every internet-related company off the map. Some shut down, but others used the opportunity to gain market control, as happened in online advertising. If or when the AI bubble bursts, we could see something similar, with the market cornered by a small handful of global companies that can throw their weight around.

Licensing deals have their flaws: AI companies have signed deals with a number of major publishers to access their content. Licensing can be a bit more equitable than buying a product from a duopoly, since deals have a fixed term and a chance to renegotiate. But if the AI market consolidates, tech companies will gain more power in those negotiations. Licensing also doesn’t account for the fact that large AI companies probably won’t take the time to negotiate with small or local outlets.

When companies keep the details of these deals private, developers get the upper hand in negotiations, because outlets are left in the dark about what their content could be worth.

The McGill report says a legislated bargaining model would be a “starting point” for fixing some of these market imbalances, because it would force big developers to negotiate transparently with publishers of any size, who could organize in a form of collective bargaining.

Copyright fight: Creators and publishers have called for copyright laws to be updated to stop unauthorized AI training on their work, but companies have been arguing to governments in Canada and the U.S. that updates to copyright laws should specify that training counts as fair use.

But making AI training a copyright violation wouldn’t stop all of the damage to news outlets. If a developer trained on their work anyway, publishers would have to sue, a process that is expensive and time-consuming even for larger outlets, and one that smaller outlets don’t have the resources to pursue.

“Slopsquatting” could be a big security risk for vibe coders

That may be the most obnoxious sentence I’ve ever written.

AI developers are making more tools that make coding easier for both pros and amateurs. But a new report suggests that hackers and other bad actors could take advantage of the fact that these tools still make a lot of mistakes.

Meet the newest, most uncomfortably named hacking tactic: slopsquatting.

Background: “Typosquatting” is an attack where bad actors publish malicious code under names that mimic common typos of popular code libraries or packages. When someone installs a dependency using the misspelled name, they pull in the malware, spyware, backdoor, or whatever other bad thing the attacker has hidden inside.

“Slopsquatting” applies the same principle to AI mistakes instead of human ones. AI-generated code has been known to hallucinate the names of code packages that don’t exist: roughly 20% of the time, according to a study in which 576,000 code samples were generated with AI. In that study, 58% of those hallucinated names reappeared at least once, and 43% reappeared consistently. Just as typosquatting targets common typos, slopsquatting could target these repeat hallucinations: an attacker registers a frequently hallucinated name, then waits for AI-generated code to tell someone to install it.

Roughly 38% of hallucinated package names are also inspired by real ones, making them easier to slip past a human reviewing AI-generated code.

Bad vibes: “Vibe coding” is a buzzword that just means creating a website or app by describing what you want to an AI instead of writing the code yourself. Because it lets amateurs with no training build software, vibe coding may be especially susceptible to slopsquatting: these “coders” are less likely to review their code for mistakes, and wouldn’t recognize one (such as a misnamed package) if they saw it.
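For readers who do vibe code, one cheap safeguard is to check every AI-suggested dependency before installing it. The Python sketch below is a minimal illustration under stated assumptions, not a complete defense: it compares each name against a hypothetical allowlist of packages you have already vetted (`KNOWN_PACKAGES`, `check_dependencies`, and the 0.8 similarity cutoff are all made up for this example) and uses the standard library’s `difflib` to flag names that closely resemble an approved one, the pattern both typosquatting and slopsquatting rely on. It deliberately doesn’t treat “this package exists on PyPI” as proof of safety, since the whole point of slopsquatting is that an attacker may have already registered the hallucinated name.

```python
import difflib

# Hypothetical allowlist of packages this project has already vetted by hand.
KNOWN_PACKAGES = {"requests", "numpy", "pandas", "flask", "django", "beautifulsoup4"}


def check_dependencies(names, known=KNOWN_PACKAGES, cutoff=0.8):
    """Sort AI-suggested package names into approved, lookalike, or unknown.

    A "lookalike" is a name that is not on the allowlist but closely resembles
    one that is. `cutoff` is an assumed similarity threshold between 0 and 1.
    """
    report = {"approved": [], "lookalike": {}, "unknown": []}
    candidates = sorted(known)  # sorted so matching is deterministic
    for name in names:
        normalized = name.strip().lower()
        if normalized in known:
            report["approved"].append(normalized)
            continue
        # get_close_matches scores candidates with difflib.SequenceMatcher.ratio()
        matches = difflib.get_close_matches(normalized, candidates, n=1, cutoff=cutoff)
        if matches:
            # Suspiciously close to a real package: possible typo or squat.
            report["lookalike"][normalized] = matches[0]
        else:
            # Not vetted and not similar to anything vetted: needs a human look.
            report["unknown"].append(normalized)
    return report


if __name__ == "__main__":
    suggested = ["requests", "reqeusts", "flask-jwt-authorizer", "numpy"]
    print(check_dependencies(suggested))
```

Running it flags `reqeusts` as a lookalike of `requests` and puts `flask-jwt-authorizer` (an invented name chosen for illustration) in the unknown pile, meaning a person should look it up before anything gets installed.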

ALSO WORTH YOUR TIME

A deep dive into why so many AI company logos look like buttholes.

Watch: Scientists didn’t actually bring back dire wolves — but that is probably a good thing.

A lawsuit claims Tesla vehicles use algorithms to exaggerate odometer readings and prematurely void warranties.

An app that claims to let people snitch on undocumented immigrants to ICE is actually a crypto scam.

To get AI crawlers off its back, Wikipedia has released a dataset that’s already optimized for machine learning.

Microsoft even put AI into Paint and Notepad. Here’s how to use it (or turn it off).